Outlier Detection#

1. What Is an Outlier?#

An outlier is a value that differs markedly from the majority of the other values in a dataset. Its presence can carry valuable information or, conversely, point to an error or anomaly.

2. Causes of Outliers#

Understanding and managing outliers matters to data analysts because outliers can significantly affect analysis results and predictive models.

Measurement or Data Entry Errors

Errors in the measurement process or during data entry can produce values that are far higher or far lower than the rest.

For example, if a temperature that should be recorded as 25 degrees Celsius is instead recorded as 250 degrees Celsius, that value becomes an outlier.

Such errors can be caused by a measuring instrument that is not properly calibrated or by human error when entering data into the system.

Natural Variation

In some cases, natural variation in the phenomenon being measured can give rise to outliers.

For example, in weather studies, extreme events such as major storms or very low temperatures can be outliers. This natural variation is part of the data and reflects events that are rare but may be worth analyzing further.

Special or Rare Events

Sometimes outliers arise from rare or unique events that do not usually occur in the dataset.

For example, a sudden spike in sales driven by a large promotion or a special discount can make that month's sales figure an outlier.

Such special events can offer important insight into the factors that influence the data.

Identifying what caused an outlier is essential in data analysis. Handling outliers appropriately is key to obtaining accurate and reliable results.

3. Why Detecting Outliers Matters#

Improving the Accuracy of Analysis

Identifying Data Errors

Better Decision-Making

Improving Predictive Model Performance

4. Types of Outliers#

Contextual Outliers (Conditional Anomalies)

Contextual outliers, or conditional anomalies, are data points that count as outliers in a particular context but may not look unusual overall. This type of outlier depends on additional contextual information such as time or location.

Collective Outliers

Collective outliers are a group of data points that may not look unusual individually but together form a pattern that is unusual compared with the dataset as a whole.

Global Outliers (Point Anomalies)

Global outliers, also known as point anomalies, are data points that differ strongly from the entire dataset. They stand out because their values are far higher or far lower than the majority of the data.

Outlier Detection Using KNN#

What Is K-Nearest Neighbors (KNN)?#

K-Nearest Neighbors (KNN) is a method that classifies an item by looking at the items closest to it. KNN is also called a lazy learning algorithm because it needs no training phase: it simply stores the dataset and does the work at classification time. KNN uses proximity and majority voting to make a prediction or forecast. The term “k” is the number that tells the algorithm how many of the nearest points (neighbors) to use when making a decision. For example, suppose we want to name a fruit by comparing it with fruits we already know. With “k” set to 3, if 2 of the 3 neighbors are apples and 1 is a banana, the algorithm says the fruit is an apple because most of its neighbors are apples.
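The fruit example can be sketched in a few lines of Python. This is a minimal illustration, not a production classifier: the function name `knn_predict`, the two features (weight and length), and all data values are assumptions made up for the example.

```python
from collections import Counter
import math

def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest points."""
    # Distance from the query to every known point, paired with that point's label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbours.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote over the neighbours' labels.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical fruit data: (weight in grams, length in cm).
fruits = [(150, 7), (160, 8), (120, 18), (130, 20), (155, 7.5)]
labels = ["apple", "apple", "banana", "banana", "apple"]

prediction = knn_predict(fruits, labels, query=(140, 9), k=3)
print(prediction)
```

With these made-up values, the three nearest neighbours of the query are two apples and one banana, so the vote goes to apple, mirroring the example in the text.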

Choosing the Value of “k”#

In KNN, the choice of “k” strongly affects the prediction. If the dataset contains significant outliers or noise, a larger “k” can help smooth the predictions and reduce the effect of noisy data. However, a value that is too large can cause underfitting.

First, we must choose the value of K. There is no exact formula for this. One tip to consider, which is meant to reduce the chance of tied votes: if the number of classes is even, K should preferably be odd; conversely, if the number of classes is odd, K should preferably be even. In practice, in Python you can write code that searches for the best K among several candidate values (for example, from K=2 to K=10).
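One possible way to search over candidate values of K is leave-one-out validation: each point is classified using all the other points, and the share of correct predictions is recorded for each K. The sketch below assumes a tiny two-class toy dataset invented for illustration (it is not the Iris data), and the helper name `loo_accuracy` is hypothetical.

```python
from collections import Counter
import math

def loo_accuracy(points, labels, k):
    """Leave-one-out accuracy of a k-NN majority vote on a labelled dataset."""
    correct = 0
    for i, query in enumerate(points):
        # Distances from point i to every other point (point i itself is held out).
        others = [
            (math.dist(query, p), labels[j])
            for j, p in enumerate(points) if j != i
        ]
        nearest = sorted(others, key=lambda pair: pair[0])[:k]
        predicted = Counter(lab for _, lab in nearest).most_common(1)[0][0]
        correct += predicted == labels[i]
    return correct / len(points)

# Hypothetical two-class toy data: two well-separated clusters of five points.
points = [(1.0, 1.1), (1.2, 0.9), (0.8, 1.0), (1.1, 1.3), (0.9, 0.8),
          (3.0, 3.1), (3.2, 2.9), (2.8, 3.0), (3.1, 3.3), (2.9, 2.8)]
labels = ["a"] * 5 + ["b"] * 5

# Score each candidate k and keep the first one with the highest accuracy.
scores = {k: loo_accuracy(points, labels, k) for k in range(1, 10)}
best_k = max(scores, key=scores.get)
print(scores)
print("best k:", best_k)
```

On this toy data, small values of k classify every point correctly, while k = 9 gets every point wrong because the nine neighbours are dominated by the other class. That is the underfitting effect of an overly large k described above.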

Compute the distance between the new data point and each existing data point#

Compute the distance using the Euclidean distance.

If there is more than one feature, the squared differences for each feature are summed before taking the square root.
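For reference, the standard Euclidean distance between two points $p$ and $q$ with $n$ features can be written as follows. The symbols $p$, $q$, and $n$ are notation chosen here, not taken from the original page.

$$
d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2}
$$

With a single feature ($n = 1$) this reduces to $|p_1 - q_1|$. With the four Iris features the sum has four squared terms, one each for petal length, petal width, sepal length, and sepal width.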

Take the three nearest data points#

From the Euclidean distances computed above, rank the data points from nearest to farthest and keep the three closest.
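The ranking step can be sketched as follows. The handful of labelled points and the new point are illustrative values in the style of the Iris petal measurements; the variable names are assumptions made for this example.

```python
import math

# Illustrative labelled points: (petal length, petal width) and a class label.
known = [
    ((1.4, 0.2), "Iris-setosa"),
    ((1.3, 0.2), "Iris-setosa"),
    ((4.7, 1.4), "Iris-versicolor"),
    ((4.5, 1.5), "Iris-versicolor"),
    ((6.0, 2.5), "Iris-virginica"),
]
new_point = (4.4, 1.3)

# Euclidean distance from the new point to each known point, sorted ascending.
ranked = sorted(
    ((math.dist(features, new_point), label) for features, label in known),
    key=lambda pair: pair[0],
)

# Keep the three smallest distances.
three_nearest = ranked[:3]
for distance, label in three_nearest:
    print(f"{distance:.3f}  {label}")
```

Here two of the three nearest points are Iris-versicolor, so a majority vote with k = 3 would assign that class to the new point.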

Data Collection#

The IRIS dataset contains information about three species of iris flowers (Setosa, Versicolor, Virginica). Its main features are the length and width of the sepal and the petal.

Data Location#

The IRIS data is hosted on aiven.io (an open source-based cloud and AI platform):

The IRIS petal data is in a MySQL database

The IRIS sepal data is in a PostgreSQL database

Collection Method#

The data is collected with Python, using the following libraries:

pymysql: used to connect to MySQL and retrieve data from it.

psycopg2: used to connect to PostgreSQL and retrieve data from it.

Data Collection Process#

a. Connecting to MySQL and PostgreSQL#

Install the required libraries

!pip install pymysql

!pip install psycopg2-binary

Requirement already satisfied: pymysql in /usr/local/python/3.12.1/lib/python3.12/site-packages (1.1.1)

Requirement already satisfied: psycopg2-binary in /usr/local/python/3.12.1/lib/python3.12/site-packages (2.9.10)

Use the following libraries:

pandas: used to read, process, and analyze tabular data (DataFrame).

from sqlalchemy import create_engine: used to create a database connection through SQLAlchemy, a SQL toolkit and ORM (Object Relational Mapper) for Python.

numpy: used for numerical computing, such as matrix and array operations and statistical calculations.

from sklearn.neighbors import NearestNeighbors: taken from the scikit-learn library for K-Nearest Neighbors (KNN) analysis, typically used for nearest-neighbor search or recommendations.

from scipy.spatial.distance import euclidean: used to compute the Euclidean distance between two points in a multidimensional space.

from IPython.display import display, HTML: used to display tables in HTML format.

import pandas as pd

from sqlalchemy import create_engine

import numpy as np

from sklearn.neighbors import NearestNeighbors

from scipy.spatial.distance import euclidean

from IPython.display import display, HTML

Importing the PostgreSQL Database from Aiven#

Connect by supplying the host, port, database name, user, and password, then read from the iris_data table.

import psycopg2

# connect to the PostgreSQL database via aiven.io

DB_HOST = "pg-359aec68-tugas-pendata.g.aivencloud.com"

DB_PORT = "17416"

DB_NAME = "defaultdb"

DB_USER = "avnadmin"

DB_PASS = "AVNS_oal2yP3mG6JLIwX3BUK"

connect_1 = psycopg2.connect(

host=DB_HOST,

port=DB_PORT,

dbname=DB_NAME,

user=DB_USER,

password=DB_PASS,

sslmode="require"

)

data_posgre = connect_1.cursor()

# access the data in the database

data_posgre.execute("SELECT * FROM iris_data ORDER BY id ASC LIMIT 10;")

data_db = data_posgre.fetchall()

print("10 Data dalam tabel data_irisposgresql:")

for data in data_db:

    print(data)

10 Data dalam tabel data_irisposgresql:

(1, 'Iris-setosa', 5.1, 3.5)

(2, 'Iris-setosa', 40.9, 30.0)

(3, 'Iris-setosa', 4.7, 3.2)

(4, 'Iris-setosa', 4.6, 3.1)

(5, 'Iris-setosa', 5.0, 3.6)

(6, 'Iris-setosa', 5.4, 3.9)

(7, 'Iris-setosa', 4.6, 3.4)

(8, 'Iris-setosa', 5.0, 3.4)

(9, 'Iris-setosa', 4.4, 2.9)

(10, 'Iris-setosa', 4.9, 3.1)

Importing the MySQL Database from Aiven#

Connect by supplying the host, port, database name, user, and password, then read from the iris_data table.

import pymysql

# connect to the MySQL database via aiven.io

DB_HOST = "mysql-3634ef1a-tugas-pendata.g.aivencloud.com"

DB_PORT = 17416

DB_NAME = "defaultdb"

DB_USER = "avnadmin"

DB_PASS = "AVNS_2NRSFWfr9pGMEI7BSpA"

connect_2 = pymysql.connect(

host=DB_HOST,

port=DB_PORT,

database=DB_NAME,

user=DB_USER,

password=DB_PASS,

ssl={'ssl': {}}

)

data_mysql = connect_2.cursor()

# fetch data from the database

data_mysql.execute("SELECT * FROM iris_data LIMIT 10;")

data_db_sql = data_mysql.fetchall()

print("10 data dalam tabel iris_data")

for data2 in data_db_sql:

    print(data2)

10 data dalam tabel iris_data

(1, 'Iris-setosa', 1.4, 0.2)

(2, 'Iris-setosa', 50.0, 20.0)

(3, 'Iris-setosa', 1.3, 0.2)

(4, 'Iris-setosa', 1.5, 0.2)

(5, 'Iris-setosa', 1.4, 0.2)

(6, 'Iris-setosa', 1.7, 0.4)

(7, 'Iris-setosa', 1.4, 0.3)

(8, 'Iris-setosa', 1.5, 0.2)

(9, 'Iris-setosa', 1.4, 0.2)

(10, 'Iris-setosa', 1.5, 0.1)

Merging the Data#

# Connect to PostgreSQL and MySQL

posgre_url = create_engine("postgresql+psycopg2://avnadmin:AVNS_oal2yP3mG6JLIwX3BUK@pg-359aec68-tugas-pendata.g.aivencloud.com:17416/defaultdb")

mysql_url = create_engine("mysql+pymysql://avnadmin:AVNS_2NRSFWfr9pGMEI7BSpA@mysql-3634ef1a-tugas-pendata.g.aivencloud.com:17416/defaultdb")

# Query MySQL (petal measurements)

mysql_query = "SELECT id, class, `petal length` AS petal_length, `petal width` AS petal_width FROM iris_data;"

df_mysql = pd.read_sql(mysql_query, mysql_url)

# Query PostgreSQL (sepal measurements)

posgre_query = "SELECT id, class, sepal_length, sepal_width FROM iris_data;"

df_postgresql = pd.read_sql(posgre_query, posgre_url)

# Merge the DataFrames on id and class

df_merged = pd.merge(df_mysql, df_postgresql, on=["id", "class"], how="inner")

# Select the numeric features

feature_columns = ["petal_length", "petal_width", "sepal_length", "sepal_width"]

data_values = df_merged[feature_columns].values

# df_merged["outlier"] = df_merged["distance"] > threshold

display(HTML(df_merged.to_html()))

| | id | class | petal_length | petal_width | sepal_length | sepal_width |

|---|---|---|---|---|---|---|

| 0 | 1 | Iris-setosa | 1.4 | 0.2 | 5.1 | 3.5 |

| 1 | 2 | Iris-setosa | 50.0 | 20.0 | 40.9 | 30.0 |

| 2 | 3 | Iris-setosa | 1.3 | 0.2 | 4.7 | 3.2 |

| 3 | 4 | Iris-setosa | 1.5 | 0.2 | 4.6 | 3.1 |

| 4 | 5 | Iris-setosa | 1.4 | 0.2 | 5.0 | 3.6 |

| 5 | 6 | Iris-setosa | 1.7 | 0.4 | 5.4 | 3.9 |

| 6 | 7 | Iris-setosa | 1.4 | 0.3 | 4.6 | 3.4 |

| 7 | 8 | Iris-setosa | 1.5 | 0.2 | 5.0 | 3.4 |

| 8 | 9 | Iris-setosa | 1.4 | 0.2 | 4.4 | 2.9 |

| 9 | 10 | Iris-setosa | 1.5 | 0.1 | 4.9 | 3.1 |

| 10 | 11 | Iris-setosa | 1.5 | 0.2 | 5.4 | 3.7 |

| 11 | 12 | Iris-setosa | 1.6 | 0.2 | 4.8 | 3.4 |

| 12 | 13 | Iris-setosa | 1.4 | 0.1 | 4.8 | 3.0 |

| 13 | 14 | Iris-setosa | 1.1 | 0.1 | 4.3 | 3.0 |

| 14 | 15 | Iris-setosa | 1.2 | 0.2 | 5.8 | 4.0 |

| 15 | 16 | Iris-setosa | 1.5 | 0.4 | 5.7 | 4.4 |

| 16 | 17 | Iris-setosa | 1.3 | 0.4 | 5.4 | 3.9 |

| 17 | 18 | Iris-setosa | 1.4 | 0.3 | 5.1 | 3.5 |

| 18 | 19 | Iris-setosa | 1.7 | 0.3 | 5.7 | 3.8 |

| 19 | 20 | Iris-setosa | 1.5 | 0.3 | 5.1 | 3.8 |

| 20 | 21 | Iris-setosa | 1.7 | 0.2 | 5.4 | 3.4 |

| 21 | 22 | Iris-setosa | 1.5 | 0.4 | 5.1 | 3.7 |

| 22 | 23 | Iris-setosa | 1.0 | 0.2 | 4.6 | 3.6 |

| 23 | 24 | Iris-setosa | 1.7 | 0.5 | 5.1 | 3.3 |

| 24 | 25 | Iris-setosa | 1.9 | 0.2 | 4.8 | 3.4 |

| 25 | 26 | Iris-setosa | 1.6 | 0.2 | 5.0 | 3.0 |

| 26 | 27 | Iris-setosa | 1.6 | 0.4 | 5.0 | 3.4 |

| 27 | 28 | Iris-setosa | 1.5 | 0.2 | 5.2 | 3.5 |

| 28 | 29 | Iris-setosa | 1.4 | 0.2 | 5.2 | 3.4 |

| 29 | 30 | Iris-setosa | 1.6 | 0.2 | 4.7 | 3.2 |

| 30 | 31 | Iris-setosa | 1.6 | 0.2 | 4.8 | 3.1 |

| 31 | 32 | Iris-setosa | 1.5 | 0.4 | 5.4 | 3.4 |

| 32 | 33 | Iris-setosa | 1.5 | 0.1 | 5.2 | 4.1 |

| 33 | 34 | Iris-setosa | 1.4 | 0.2 | 5.5 | 4.2 |

| 34 | 35 | Iris-setosa | 1.5 | 0.1 | 4.9 | 3.1 |

| 35 | 36 | Iris-setosa | 1.2 | 0.2 | 5.0 | 3.2 |

| 36 | 37 | Iris-setosa | 1.3 | 0.2 | 5.5 | 3.5 |

| 37 | 38 | Iris-setosa | 1.5 | 0.1 | 4.9 | 3.1 |

| 38 | 39 | Iris-setosa | 1.3 | 0.2 | 4.4 | 3.0 |

| 39 | 40 | Iris-setosa | 1.5 | 0.2 | 5.1 | 3.4 |

| 40 | 41 | Iris-setosa | 1.3 | 0.3 | 5.0 | 3.5 |

| 41 | 42 | Iris-setosa | 1.3 | 0.3 | 4.5 | 2.3 |

| 42 | 43 | Iris-setosa | 1.3 | 0.2 | 4.4 | 3.2 |

| 43 | 44 | Iris-setosa | 1.6 | 0.6 | 5.0 | 3.5 |

| 44 | 45 | Iris-setosa | 1.9 | 0.4 | 5.1 | 3.8 |

| 45 | 46 | Iris-setosa | 1.4 | 0.3 | 4.8 | 3.0 |

| 46 | 47 | Iris-setosa | 1.6 | 0.2 | 5.1 | 3.8 |

| 47 | 48 | Iris-setosa | 1.4 | 0.2 | 4.6 | 3.2 |

| 48 | 49 | Iris-setosa | 1.5 | 0.2 | 5.3 | 3.7 |

| 49 | 50 | Iris-setosa | 1.4 | 0.2 | 5.0 | 3.3 |

| 50 | 51 | Iris-versicolor | 4.7 | 1.4 | 7.0 | 3.2 |

| 51 | 52 | Iris-versicolor | 4.5 | 1.5 | 6.4 | 3.2 |

| 52 | 53 | Iris-versicolor | 4.9 | 1.5 | 6.9 | 3.1 |

| 53 | 54 | Iris-versicolor | 4.0 | 1.3 | 5.5 | 2.3 |

| 54 | 55 | Iris-versicolor | 4.6 | 1.5 | 6.5 | 2.8 |

| 55 | 56 | Iris-versicolor | 4.5 | 1.3 | 5.7 | 2.8 |

| 56 | 57 | Iris-versicolor | 4.7 | 1.6 | 6.3 | 3.3 |

| 57 | 58 | Iris-versicolor | 3.3 | 1.0 | 4.9 | 2.4 |

| 58 | 59 | Iris-versicolor | 4.6 | 1.3 | 6.6 | 2.9 |

| 59 | 60 | Iris-versicolor | 3.9 | 1.4 | 5.2 | 2.7 |

| 60 | 61 | Iris-versicolor | 3.5 | 1.0 | 5.0 | 2.0 |

| 61 | 62 | Iris-versicolor | 4.2 | 1.5 | 5.9 | 3.0 |

| 62 | 63 | Iris-versicolor | 4.0 | 1.0 | 6.0 | 2.2 |

| 63 | 64 | Iris-versicolor | 4.7 | 1.4 | 6.1 | 2.9 |

| 64 | 65 | Iris-versicolor | 3.6 | 1.3 | 5.6 | 2.9 |

| 65 | 66 | Iris-versicolor | 4.4 | 1.4 | 6.7 | 3.1 |

| 66 | 67 | Iris-versicolor | 4.5 | 1.5 | 5.6 | 3.0 |

| 67 | 68 | Iris-versicolor | 4.1 | 1.0 | 5.8 | 2.7 |

| 68 | 69 | Iris-versicolor | 4.5 | 1.5 | 6.2 | 2.2 |

| 69 | 70 | Iris-versicolor | 3.9 | 1.1 | 5.6 | 2.5 |

| 70 | 71 | Iris-versicolor | 4.8 | 1.8 | 5.9 | 3.2 |

| 71 | 72 | Iris-versicolor | 4.0 | 1.3 | 6.1 | 2.8 |

| 72 | 73 | Iris-versicolor | 4.9 | 1.5 | 6.3 | 2.5 |

| 73 | 74 | Iris-versicolor | 4.7 | 1.2 | 6.1 | 2.8 |

| 74 | 75 | Iris-versicolor | 4.3 | 1.3 | 6.4 | 2.9 |

| 75 | 76 | Iris-versicolor | 4.4 | 1.4 | 6.6 | 3.0 |

| 76 | 77 | Iris-versicolor | 4.8 | 1.4 | 6.8 | 2.8 |

| 77 | 78 | Iris-versicolor | 5.0 | 1.7 | 6.7 | 3.0 |

| 78 | 79 | Iris-versicolor | 4.5 | 1.5 | 6.0 | 2.9 |

| 79 | 80 | Iris-versicolor | 3.5 | 1.0 | 5.7 | 2.6 |

| 80 | 81 | Iris-versicolor | 3.8 | 1.1 | 5.5 | 2.4 |

| 81 | 82 | Iris-versicolor | 3.7 | 1.0 | 5.5 | 2.4 |

| 82 | 83 | Iris-versicolor | 3.9 | 1.2 | 5.8 | 2.7 |

| 83 | 84 | Iris-versicolor | 5.1 | 1.6 | 6.0 | 2.7 |

| 84 | 85 | Iris-versicolor | 4.5 | 1.5 | 5.4 | 3.0 |

| 85 | 86 | Iris-versicolor | 4.5 | 1.6 | 6.0 | 3.4 |

| 86 | 87 | Iris-versicolor | 4.7 | 1.5 | 6.7 | 3.1 |

| 87 | 88 | Iris-versicolor | 4.4 | 1.3 | 6.3 | 2.3 |

| 88 | 89 | Iris-versicolor | 4.1 | 1.3 | 5.6 | 3.0 |

| 89 | 90 | Iris-versicolor | 4.0 | 1.3 | 5.5 | 2.5 |

| 90 | 91 | Iris-versicolor | 4.4 | 1.2 | 5.5 | 2.6 |

| 91 | 92 | Iris-versicolor | 4.6 | 1.4 | 6.1 | 3.0 |

| 92 | 93 | Iris-versicolor | 4.0 | 1.2 | 5.8 | 2.6 |

| 93 | 94 | Iris-versicolor | 3.3 | 1.0 | 5.0 | 2.3 |

| 94 | 95 | Iris-versicolor | 4.2 | 1.3 | 5.6 | 2.7 |

| 95 | 96 | Iris-versicolor | 4.2 | 1.2 | 5.7 | 3.0 |

| 96 | 97 | Iris-versicolor | 4.2 | 1.3 | 5.7 | 2.9 |

| 97 | 98 | Iris-versicolor | 4.3 | 1.3 | 6.2 | 2.9 |

| 98 | 99 | Iris-versicolor | 3.0 | 1.1 | 5.1 | 2.5 |

| 99 | 100 | Iris-versicolor | 4.1 | 1.3 | 5.7 | 2.8 |

| 100 | 101 | Iris-virginica | 6.0 | 2.5 | 6.3 | 3.3 |

| 101 | 102 | Iris-virginica | 5.1 | 1.9 | 5.8 | 2.7 |

| 102 | 103 | Iris-virginica | 5.9 | 2.1 | 7.1 | 3.0 |

| 103 | 104 | Iris-virginica | 5.6 | 1.8 | 6.3 | 2.9 |

| 104 | 105 | Iris-virginica | 5.8 | 2.2 | 6.5 | 3.0 |

| 105 | 106 | Iris-virginica | 6.6 | 2.1 | 7.6 | 3.0 |

| 106 | 107 | Iris-virginica | 4.5 | 1.7 | 4.9 | 2.5 |

| 107 | 108 | Iris-virginica | 6.3 | 1.8 | 7.3 | 2.9 |

| 108 | 109 | Iris-virginica | 5.8 | 1.8 | 6.7 | 2.5 |

| 109 | 110 | Iris-virginica | 6.1 | 2.5 | 7.2 | 3.6 |

| 110 | 111 | Iris-virginica | 5.1 | 2.0 | 6.5 | 3.2 |

| 111 | 112 | Iris-virginica | 5.3 | 1.9 | 6.4 | 2.7 |

| 112 | 113 | Iris-virginica | 5.5 | 2.1 | 6.8 | 3.0 |

| 113 | 114 | Iris-virginica | 5.0 | 2.0 | 5.7 | 2.5 |

| 114 | 115 | Iris-virginica | 5.1 | 2.4 | 5.8 | 2.8 |

| 115 | 116 | Iris-virginica | 5.3 | 2.3 | 6.4 | 3.2 |

| 116 | 117 | Iris-virginica | 5.5 | 1.8 | 6.5 | 3.0 |

| 117 | 118 | Iris-virginica | 6.7 | 2.2 | 7.7 | 3.8 |

| 118 | 119 | Iris-virginica | 6.9 | 2.3 | 7.7 | 2.6 |

| 119 | 120 | Iris-virginica | 5.0 | 1.5 | 6.0 | 2.2 |

| 120 | 121 | Iris-virginica | 5.7 | 2.3 | 6.9 | 3.2 |

| 121 | 122 | Iris-virginica | 4.9 | 2.0 | 5.6 | 2.8 |

| 122 | 123 | Iris-virginica | 6.7 | 2.0 | 7.7 | 2.8 |

| 123 | 124 | Iris-virginica | 4.9 | 1.8 | 6.3 | 2.7 |

| 124 | 125 | Iris-virginica | 5.7 | 2.1 | 6.7 | 3.3 |

| 125 | 126 | Iris-virginica | 6.0 | 1.8 | 7.2 | 3.2 |

| 126 | 127 | Iris-virginica | 4.8 | 1.8 | 6.2 | 2.8 |

| 127 | 128 | Iris-virginica | 4.9 | 1.8 | 6.1 | 3.0 |

| 128 | 129 | Iris-virginica | 5.6 | 2.1 | 6.4 | 2.8 |

| 129 | 130 | Iris-virginica | 5.8 | 1.6 | 7.2 | 3.0 |

| 130 | 131 | Iris-virginica | 6.1 | 1.9 | 7.4 | 2.8 |

| 131 | 132 | Iris-virginica | 6.4 | 2.0 | 7.9 | 3.8 |

| 132 | 133 | Iris-virginica | 5.6 | 2.2 | 6.4 | 2.8 |

| 133 | 134 | Iris-virginica | 5.1 | 1.5 | 6.3 | 2.8 |

| 134 | 135 | Iris-virginica | 5.6 | 1.4 | 6.1 | 2.6 |

| 135 | 136 | Iris-virginica | 6.1 | 2.3 | 7.7 | 3.0 |

| 136 | 137 | Iris-virginica | 5.6 | 2.4 | 6.3 | 3.4 |

| 137 | 138 | Iris-virginica | 5.5 | 1.8 | 6.4 | 3.1 |

| 138 | 139 | Iris-virginica | 4.8 | 1.8 | 6.0 | 3.0 |

| 139 | 140 | Iris-virginica | 5.4 | 2.1 | 6.9 | 3.1 |

| 140 | 141 | Iris-virginica | 5.6 | 2.4 | 6.7 | 3.1 |

| 141 | 142 | Iris-virginica | 5.1 | 2.3 | 6.9 | 3.1 |

| 142 | 143 | Iris-virginica | 5.1 | 1.9 | 5.8 | 2.7 |

| 143 | 144 | Iris-virginica | 5.9 | 2.3 | 6.8 | 3.2 |

| 144 | 145 | Iris-virginica | 5.7 | 2.5 | 6.7 | 3.3 |

| 145 | 146 | Iris-virginica | 5.2 | 2.3 | 6.7 | 3.0 |

| 146 | 147 | Iris-virginica | 5.0 | 1.9 | 6.3 | 2.5 |

| 147 | 148 | Iris-virginica | 5.2 | 2.0 | 6.5 | 3.0 |

| 148 | 149 | Iris-virginica | 5.4 | 2.3 | 6.2 | 3.4 |

| 149 | 150 | Iris-virginica | 5.1 | 1.8 | 5.9 | 3.0 |

| 150 | 151 | ? | 5.1 | 3.2 | 5.8 | 1.0 |
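The merge step can be illustrated on two miniature frames. This is a sketch under assumptions: the frames `petal` and `sepal` and their values are invented stand-ins for the MySQL and PostgreSQL tables, and the outer merge with `indicator=True` is an optional check added here, not part of the original workflow.

```python
import pandas as pd

# Hypothetical miniature versions of the two source tables.
petal = pd.DataFrame({
    "id": [1, 2, 3],
    "class": ["Iris-setosa", "Iris-setosa", "Iris-setosa"],
    "petal_length": [1.4, 50.0, 1.3],
})
sepal = pd.DataFrame({
    "id": [1, 2, 4],
    "class": ["Iris-setosa", "Iris-setosa", "Iris-setosa"],
    "sepal_length": [5.1, 40.9, 4.6],
})

# An inner merge keeps only the (id, class) pairs present in both frames.
merged = pd.merge(petal, sepal, on=["id", "class"], how="inner")
print(merged)

# An outer merge with indicator=True shows which side each row came from.
audit = pd.merge(petal, sepal, on=["id", "class"], how="outer", indicator=True)
print(audit[["id", "_merge"]])
```

Because the merge is an inner join, any row whose `id` and `class` do not match in both sources is silently dropped, so an audit like the second merge can help confirm that no rows were lost.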

Program to Compute KNN Distances#
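The scoring idea used in this section is that a row is suspicious when the average distance to its k nearest neighbours is large. As a compact illustration, the sketch below computes that score with NumPy broadcasting on a five-row sample. The function name `knn_avg_distance` and the sample rows are assumptions for this example, and the vectorized form is an alternative formulation rather than the program used on this page.

```python
import numpy as np

def knn_avg_distance(X, k=3):
    """Mean Euclidean distance from each row of X to its k nearest other rows."""
    X = np.asarray(X, dtype=float)
    # Pairwise differences via broadcasting: shape (n, n, n_features).
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # Exclude each point's distance to itself.
    np.fill_diagonal(dist, np.inf)
    # Sort each row and average the k smallest distances.
    return np.sort(dist, axis=1)[:, :k].mean(axis=1)

# Hypothetical sample: four ordinary rows and one extreme row.
X = [
    [1.4, 0.2, 5.1, 3.5],
    [1.3, 0.2, 4.7, 3.2],
    [1.5, 0.2, 4.6, 3.1],
    [1.4, 0.2, 5.0, 3.6],
    [50.0, 20.0, 40.9, 30.0],
]
scores = knn_avg_distance(X, k=3)
print(scores.round(3))
print("most isolated row:", int(scores.argmax()))
```

The extreme row receives a score far larger than the others, which is what a percentile threshold on the scores is meant to pick up. The pairwise array grows with the square of the number of rows, so this form suits small datasets such as Iris.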

import pandas as pd

from tabulate import tabulate

k = 3  # number of nearest neighbors to use

hasil = []

for i in range(len(df_merged)):

    jarak = []

    for j in range(len(df_merged)):

        if i != j:  # skip comparing a row with itself

            total = ((df_merged.loc[i, 'petal_length'] - df_merged.loc[j, 'petal_length'])**2 +

                     (df_merged.loc[i, 'petal_width'] - df_merged.loc[j, 'petal_width'])**2 +

                     (df_merged.loc[i, 'sepal_length'] - df_merged.loc[j, 'sepal_length'])**2 +

                     (df_merged.loc[i, 'sepal_width'] - df_merged.loc[j, 'sepal_width'])**2)**0.5

            jarak.append(total)

    jarak.sort()  # sort the distances in ascending order

    avg_distance = sum(jarak[:k]) / k  # average over the k nearest neighbors

    hasil.append(avg_distance)

df_merged['avg_distance'] = hasil

# Detect outliers (rows whose average distance exceeds a chosen threshold)

threshold = df_merged['avg_distance'].quantile(0.9999)  # threshold at the 99.99th percentile

outliers = df_merged[df_merged['avg_distance'] > threshold]

# Display every row of the KNN distance results

print("Hasil perhitungan jarak rata-rata (KNN):")

print(tabulate(df_merged[['id', 'petal_length', 'petal_width', 'sepal_length', 'sepal_width', 'avg_distance']],

               headers='keys', tablefmt='grid'))

Hasil perhitungan jarak rata-rata (KNN):

+-----+------+----------------+---------------+----------------+---------------+----------------+

| | id | petal_length | petal_width | sepal_length | sepal_width | avg_distance |

+=====+======+================+===============+================+===============+================+

| 0 | 1 | 1.4 | 0.2 | 5.1 | 3.5 | 0.127614 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 1 | 2 | 50 | 20 | 40.9 | 30 | 63.2578 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 2 | 3 | 1.3 | 0.2 | 4.7 | 3.2 | 0.216982 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 3 | 4 | 1.5 | 0.2 | 4.6 | 3.1 | 0.179411 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 4 | 5 | 1.4 | 0.2 | 5 | 3.6 | 0.162611 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 5 | 6 | 1.7 | 0.4 | 5.4 | 3.9 | 0.346209 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 6 | 7 | 1.4 | 0.3 | 4.6 | 3.4 | 0.262727 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 7 | 8 | 1.5 | 0.2 | 5 | 3.4 | 0.138209 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 8 | 9 | 1.4 | 0.2 | 4.4 | 2.9 | 0.25255 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 9 | 10 | 1.5 | 0.1 | 4.9 | 3.1 | 0.057735 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 10 | 11 | 1.5 | 0.2 | 5.4 | 3.7 | 0.227614 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 11 | 12 | 1.6 | 0.2 | 4.8 | 3.4 | 0.243352 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 12 | 13 | 1.4 | 0.1 | 4.8 | 3 | 0.173205 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 13 | 14 | 1.1 | 0.1 | 4.3 | 3 | 0.302529 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 14 | 15 | 1.2 | 0.2 | 5.8 | 4 | 0.476358 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 15 | 16 | 1.5 | 0.4 | 5.7 | 4.4 | 0.50824 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 16 | 17 | 1.3 | 0.4 | 5.4 | 3.9 | 0.364755 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 17 | 18 | 1.4 | 0.3 | 5.1 | 3.5 | 0.138209 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 18 | 19 | 1.7 | 0.3 | 5.7 | 3.8 | 0.396001 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 19 | 20 | 1.5 | 0.3 | 5.1 | 3.8 | 0.175931 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 20 | 21 | 1.7 | 0.2 | 5.4 | 3.4 | 0.314466 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 21 | 22 | 1.5 | 0.4 | 5.1 | 3.7 | 0.21044 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 22 | 23 | 1 | 0.2 | 4.6 | 3.6 | 0.495925 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 23 | 24 | 1.7 | 0.5 | 5.1 | 3.3 | 0.27958 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 24 | 25 | 1.9 | 0.2 | 4.8 | 3.4 | 0.362159 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 25 | 26 | 1.6 | 0.2 | 5 | 3 | 0.2 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 26 | 27 | 1.6 | 0.4 | 5 | 3.4 | 0.215738 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 27 | 28 | 1.5 | 0.2 | 5.2 | 3.5 | 0.141421 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 28 | 29 | 1.4 | 0.2 | 5.2 | 3.4 | 0.141421 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 29 | 30 | 1.6 | 0.2 | 4.7 | 3.2 | 0.179411 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 30 | 31 | 1.6 | 0.2 | 4.8 | 3.1 | 0.162611 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 31 | 32 | 1.5 | 0.4 | 5.4 | 3.4 | 0.294281 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 32 | 33 | 1.5 | 0.1 | 5.2 | 4.1 | 0.355662 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 33 | 34 | 1.4 | 0.2 | 5.5 | 4.2 | 0.364755 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 34 | 35 | 1.5 | 0.1 | 4.9 | 3.1 | 0.057735 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 35 | 36 | 1.2 | 0.2 | 5 | 3.2 | 0.290499 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 36 | 37 | 1.3 | 0.2 | 5.5 | 3.5 | 0.315963 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 37 | 38 | 1.5 | 0.1 | 4.9 | 3.1 | 0.057735 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 38 | 39 | 1.3 | 0.2 | 4.4 | 3 | 0.195457 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 39 | 40 | 1.5 | 0.2 | 5.1 | 3.4 | 0.127614 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 40 | 41 | 1.3 | 0.3 | 5 | 3.5 | 0.162611 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 41 | 42 | 1.3 | 0.3 | 4.5 | 2.3 | 0.702252 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 42 | 43 | 1.3 | 0.2 | 4.4 | 3.2 | 0.241202 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 43 | 44 | 1.6 | 0.6 | 5 | 3.5 | 0.268137 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 44 | 45 | 1.9 | 0.4 | 5.1 | 3.8 | 0.382344 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 45 | 46 | 1.4 | 0.3 | 4.8 | 3 | 0.236508 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 46 | 47 | 1.6 | 0.2 | 5.1 | 3.8 | 0.21044 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 47 | 48 | 1.4 | 0.2 | 4.6 | 3.2 | 0.168817 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 48 | 49 | 1.5 | 0.2 | 5.3 | 3.7 | 0.189519 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 49 | 50 | 1.4 | 0.2 | 5 | 3.3 | 0.179411 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 50 | 51 | 4.7 | 1.4 | 7 | 3.2 | 0.344043 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 51 | 52 | 4.5 | 1.5 | 6.4 | 3.2 | 0.309071 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 52 | 53 | 4.9 | 1.5 | 6.9 | 3.1 | 0.287882 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 53 | 54 | 4 | 1.3 | 5.5 | 2.3 | 0.272076 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 54 | 55 | 4.6 | 1.5 | 6.5 | 2.8 | 0.311781 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 55 | 56 | 4.5 | 1.3 | 5.7 | 2.8 | 0.310819 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 56 | 57 | 4.7 | 1.6 | 6.3 | 3.3 | 0.354335 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 57 | 58 | 3.3 | 1 | 4.9 | 2.4 | 0.328992 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 58 | 59 | 4.6 | 1.3 | 6.6 | 2.9 | 0.268709 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 59 | 60 | 3.9 | 1.4 | 5.2 | 2.7 | 0.472272 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 60 | 61 | 3.5 | 1 | 5 | 2 | 0.496544 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 61 | 62 | 4.2 | 1.5 | 5.9 | 3 | 0.330739 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 62 | 63 | 4 | 1 | 6 | 2.2 | 0.519079 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 63 | 64 | 4.7 | 1.4 | 6.1 | 2.9 | 0.203326 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 64 | 65 | 3.6 | 1.3 | 5.6 | 2.9 | 0.46046 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 65 | 66 | 4.4 | 1.4 | 6.7 | 3.1 | 0.257959 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 66 | 67 | 4.5 | 1.5 | 5.6 | 3 | 0.295766 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 67 | 68 | 4.1 | 1 | 5.8 | 2.7 | 0.286485 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 68 | 69 | 4.5 | 1.5 | 6.2 | 2.2 | 0.437665 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 69 | 70 | 3.9 | 1.1 | 5.6 | 2.5 | 0.227576 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 70 | 71 | 4.8 | 1.8 | 5.9 | 3.2 | 0.294721 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 71 | 72 | 4 | 1.3 | 6.1 | 2.8 | 0.350746 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 72 | 73 | 4.9 | 1.5 | 6.3 | 2.5 | 0.377807 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 73 | 74 | 4.7 | 1.2 | 6.1 | 2.8 | 0.303635 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 74 | 75 | 4.3 | 1.3 | 6.4 | 2.9 | 0.275043 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 75 | 76 | 4.4 | 1.4 | 6.6 | 3 | 0.216982 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 76 | 77 | 4.8 | 1.4 | 6.8 | 2.8 | 0.336349 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 77 | 78 | 5 | 1.7 | 6.7 | 3 | 0.367568 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 78 | 79 | 4.5 | 1.5 | 6 | 2.9 | 0.25887 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 79 | 80 | 3.5 | 1 | 5.7 | 2.6 | 0.402188 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 80 | 81 | 3.8 | 1.1 | 5.5 | 2.4 | 0.204875 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 81 | 82 | 3.7 | 1 | 5.5 | 2.4 | 0.250802 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 82 | 83 | 3.9 | 1.2 | 5.8 | 2.7 | 0.229613 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 83 | 84 | 5.1 | 1.6 | 6 | 2.7 | 0.350924 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 84 | 85 | 4.5 | 1.5 | 5.4 | 3 | 0.363965 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 85 | 86 | 4.5 | 1.6 | 6 | 3.4 | 0.418896 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 86 | 87 | 4.7 | 1.5 | 6.7 | 3.1 | 0.305099 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 87 | 88 | 4.4 | 1.3 | 6.3 | 2.3 | 0.47688 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 88 | 89 | 4.1 | 1.3 | 5.6 | 3 | 0.190006 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 89 | 90 | 4 | 1.3 | 5.5 | 2.5 | 0.248316 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 90 | 91 | 4.4 | 1.2 | 5.5 | 2.6 | 0.335022 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 91 | 92 | 4.6 | 1.4 | 6.1 | 3 | 0.213807 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 92 | 93 | 4 | 1.2 | 5.8 | 2.6 | 0.216982 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 93 | 94 | 3.3 | 1 | 5 | 2.3 | 0.296425 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 94 | 95 | 4.2 | 1.3 | 5.6 | 2.7 | 0.220462 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 95 | 96 | 4.2 | 1.2 | 5.7 | 3 | 0.186525 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 96 | 97 | 4.2 | 1.3 | 5.7 | 2.9 | 0.152016 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 97 | 98 | 4.3 | 1.3 | 6.2 | 2.9 | 0.292691 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 98 | 99 | 3 | 1.1 | 5.1 | 2.5 | 0.498569 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 99 | 100 | 4.1 | 1.3 | 5.7 | 2.8 | 0.179411 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 100 | 101 | 6 | 2.5 | 6.3 | 3.3 | 0.478055 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 101 | 102 | 5.1 | 1.9 | 5.8 | 2.7 | 0.193601 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 102 | 103 | 5.9 | 2.1 | 7.1 | 3 | 0.39987 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 103 | 104 | 5.6 | 1.8 | 6.3 | 2.9 | 0.273853 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 104 | 105 | 5.8 | 2.2 | 6.5 | 3 | 0.325594 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 105 | 106 | 6.6 | 2.1 | 7.6 | 3 | 0.447149 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 106 | 107 | 4.5 | 1.7 | 4.9 | 2.5 | 0.763383 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 107 | 108 | 6.3 | 1.8 | 7.3 | 2.9 | 0.409872 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 108 | 109 | 5.8 | 1.8 | 6.7 | 2.5 | 0.591073 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 109 | 110 | 6.1 | 2.5 | 7.2 | 3.6 | 0.670128 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 110 | 111 | 5.1 | 2 | 6.5 | 3.2 | 0.340679 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 111 | 112 | 5.3 | 1.9 | 6.4 | 2.7 | 0.364914 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 112 | 113 | 5.5 | 2.1 | 6.8 | 3 | 0.29339 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 113 | 114 | 5 | 2 | 5.7 | 2.5 | 0.286938 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 114 | 115 | 5.1 | 2.4 | 5.8 | 2.8 | 0.503234 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 115 | 116 | 5.3 | 2.3 | 6.4 | 3.2 | 0.349444 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 116 | 117 | 5.5 | 1.8 | 6.5 | 3 | 0.248975 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 117 | 118 | 6.7 | 2.2 | 7.7 | 3.8 | 0.697026 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 118 | 119 | 6.9 | 2.3 | 7.7 | 2.6 | 0.618153 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 119 | 120 | 5 | 1.5 | 6 | 2.2 | 0.498007 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 120 | 121 | 5.7 | 2.3 | 6.9 | 3.2 | 0.262727 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 121 | 122 | 4.9 | 2 | 5.6 | 2.8 | 0.321373 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 122 | 123 | 6.7 | 2 | 7.7 | 2.8 | 0.428387 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 123 | 124 | 4.9 | 1.8 | 6.3 | 2.7 | 0.25957 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 124 | 125 | 5.7 | 2.1 | 6.7 | 3.3 | 0.330131 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 125 | 126 | 6 | 1.8 | 7.2 | 3.2 | 0.389866 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 126 | 127 | 4.8 | 1.8 | 6.2 | 2.8 | 0.233666 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 127 | 128 | 4.9 | 1.8 | 6.1 | 3 | 0.223071 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 128 | 129 | 5.6 | 2.1 | 6.4 | 2.8 | 0.249297 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 129 | 130 | 5.8 | 1.6 | 7.2 | 3 | 0.458642 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 130 | 131 | 6.1 | 1.9 | 7.4 | 2.8 | 0.397291 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 131 | 132 | 6.4 | 2 | 7.9 | 3.8 | 0.740949 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 132 | 133 | 5.6 | 2.2 | 6.4 | 2.8 | 0.274755 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 133 | 134 | 5.1 | 1.5 | 6.3 | 2.8 | 0.355461 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 134 | 135 | 5.6 | 1.4 | 6.1 | 2.6 | 0.559463 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 135 | 136 | 6.1 | 2.3 | 7.7 | 3 | 0.583188 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 136 | 137 | 5.6 | 2.4 | 6.3 | 3.4 | 0.35217 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 137 | 138 | 5.5 | 1.8 | 6.4 | 3.1 | 0.25789 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 138 | 139 | 4.8 | 1.8 | 6 | 3 | 0.215957 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 139 | 140 | 5.4 | 2.1 | 6.9 | 3.1 | 0.298105 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 140 | 141 | 5.6 | 2.4 | 6.7 | 3.1 | 0.285311 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 141 | 142 | 5.1 | 2.3 | 6.9 | 3.1 | 0.358182 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 142 | 143 | 5.1 | 1.9 | 5.8 | 2.7 | 0.193601 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 143 | 144 | 5.9 | 2.3 | 6.8 | 3.2 | 0.285354 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 144 | 145 | 5.7 | 2.5 | 6.7 | 3.3 | 0.287059 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 145 | 146 | 5.2 | 2.3 | 6.7 | 3 | 0.32202 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 146 | 147 | 5 | 1.9 | 6.3 | 2.5 | 0.335471 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 147 | 148 | 5.2 | 2 | 6.5 | 3 | 0.310191 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 148 | 149 | 5.4 | 2.3 | 6.2 | 3.4 | 0.367242 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 149 | 150 | 5.1 | 1.8 | 5.9 | 3 | 0.310244 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

| 150 | 151 | 5.1 | 3.2 | 5.8 | 1 | 1.98177 |

+-----+------+----------------+---------------+----------------+---------------+----------------+

print("Suspected outliers:")

print(tabulate(outliers, headers='keys', tablefmt='grid'))

Suspected outliers:

+----+------+-------------+----------------+---------------+----------------+---------------+----------------+

| | id | class | petal_length | petal_width | sepal_length | sepal_width | avg_distance |

+====+======+=============+================+===============+================+===============+================+

| 1 | 2 | Iris-setosa | 50 | 20 | 40.9 | 30 | 63.2578 |

+----+------+-------------+----------------+---------------+----------------+---------------+----------------+

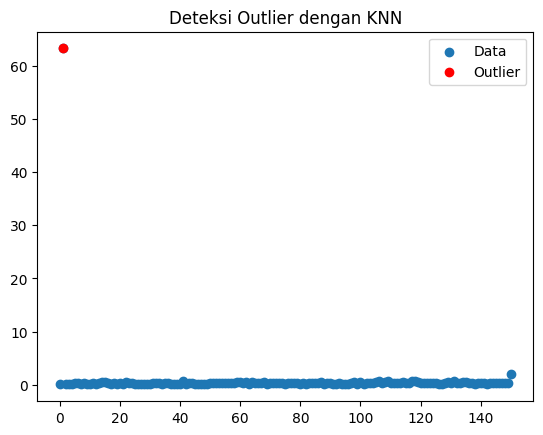

# Select the rows whose average KNN distance exceeds the threshold

outlier_df = df_merged[df_merged['avg_distance'] > threshold]

# Visualization: all points in the default color, outliers overlaid in red

import matplotlib.pyplot as plt

plt.scatter(df_merged.index, df_merged['avg_distance'], label='Data')

plt.scatter(outlier_df.index, outlier_df['avg_distance'], color='red', label='Outlier')

plt.legend()

plt.title('Outlier Detection with KNN')

plt.show()

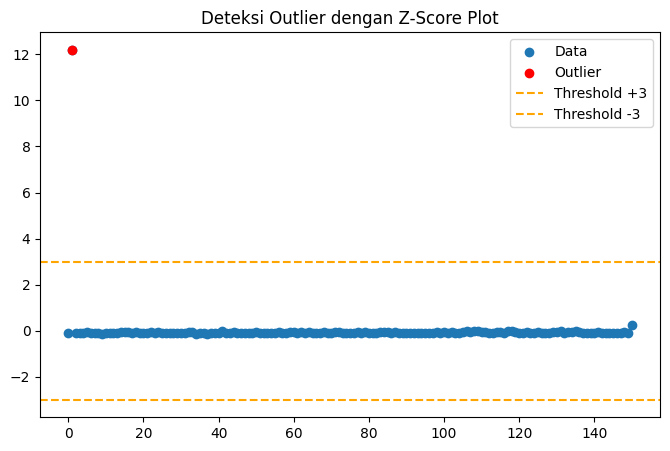

import matplotlib.pyplot as plt

import numpy as np

# Compute the Z-score of each row's average distance

mean_distance = df_merged['avg_distance'].mean()

std_distance = df_merged['avg_distance'].std()

df_merged['z_score'] = (df_merged['avg_distance'] - mean_distance) / std_distance

# Flag outliers (Z-score > 3 or < -3)

outlier_df = df_merged[np.abs(df_merged['z_score']) > 3]

# Visualization: Z-score plot with the +3 and -3 thresholds

plt.figure(figsize=(8, 5))

plt.scatter(df_merged.index, df_merged['z_score'], label='Data')

plt.scatter(outlier_df.index, outlier_df['z_score'], color='red', label='Outlier')

plt.axhline(y=3, color='orange', linestyle='dashed', label='Threshold +3')

plt.axhline(y=-3, color='orange', linestyle='dashed', label='Threshold -3')

plt.legend()

plt.title('Outlier Detection with Z-Score Plot')

plt.show()
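The Z-score rule used above can be checked by hand on a small sample. The following is a minimal sketch, not the notebook's own code: it uses only the Python standard library instead of pandas, and the list `avg_distances` is an illustrative subset (the `avg_distance` values of table rows 67 to 86, plus the value 63.2578 reported for the suspected outlier). The names `avg_distances`, `z_scores`, and `outlier_indices` are assumptions introduced for this example.

```python
import statistics

# Illustrative subset: avg_distance for table rows 67-86, followed by the
# value reported for the suspected outlier (63.2578) as the last entry.
avg_distances = [
    0.286485, 0.437665, 0.227576, 0.294721, 0.350746,
    0.377807, 0.303635, 0.275043, 0.216982, 0.336349,
    0.367568, 0.25887, 0.402188, 0.204875, 0.250802,
    0.229613, 0.350924, 0.363965, 0.418896, 0.305099,
    63.2578,
]

mean_distance = statistics.mean(avg_distances)
# statistics.stdev is the sample standard deviation (n - 1 in the
# denominator), which matches the default of pandas' Series.std().
std_distance = statistics.stdev(avg_distances)

# Z-score: how many standard deviations each value lies from the mean.
z_scores = [(d - mean_distance) / std_distance for d in avg_distances]

# Same rule as in the notebook: |Z-score| > 3 marks an outlier.
outlier_indices = [i for i, z in enumerate(z_scores) if abs(z) > 3]

for i in outlier_indices:
    print(f"index={i} avg_distance={avg_distances[i]} z_score={z_scores[i]:.2f}")
```

On this subset only the last entry crosses the threshold. Note that the mean and standard deviation are computed from data that includes the outlier itself, so one extreme value inflates both; on very small samples this can keep even an obvious outlier below a Z-score of 3.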